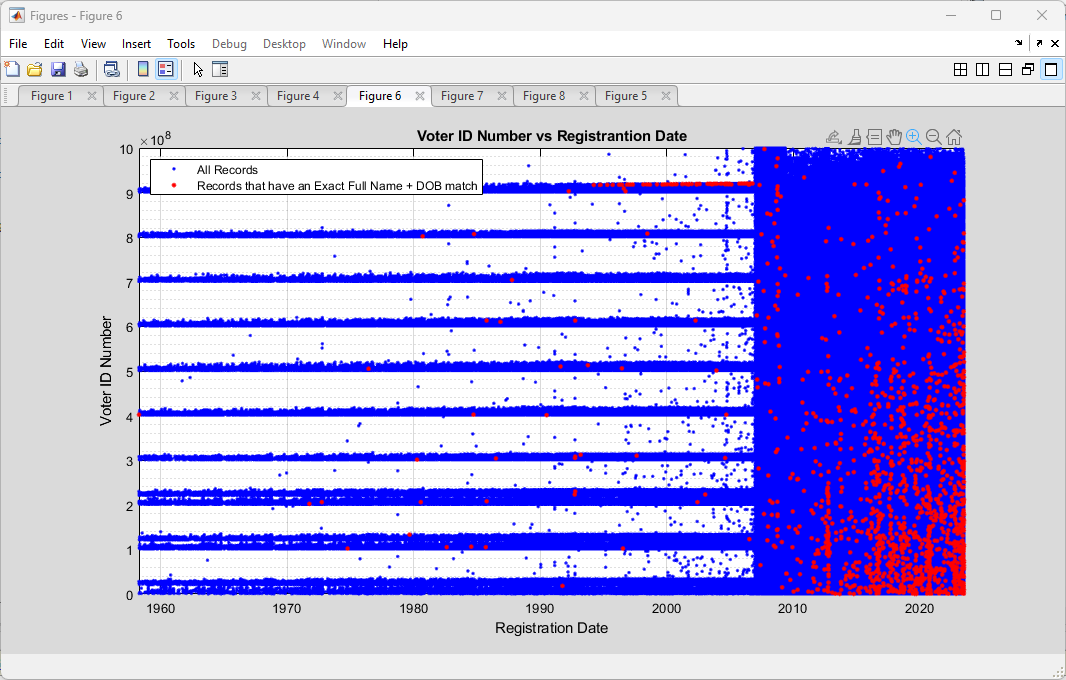

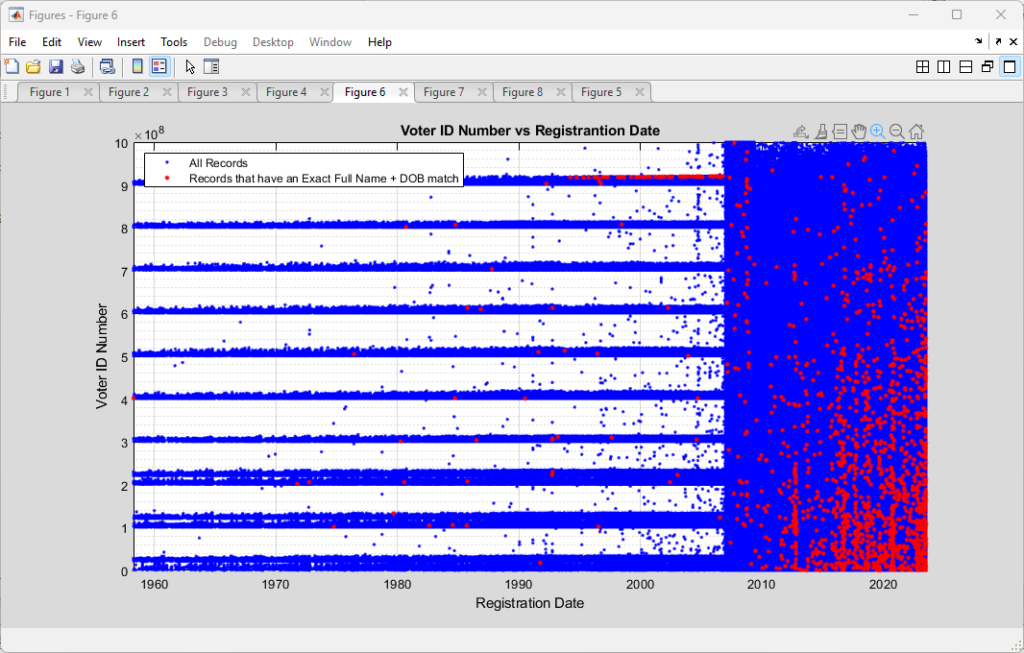

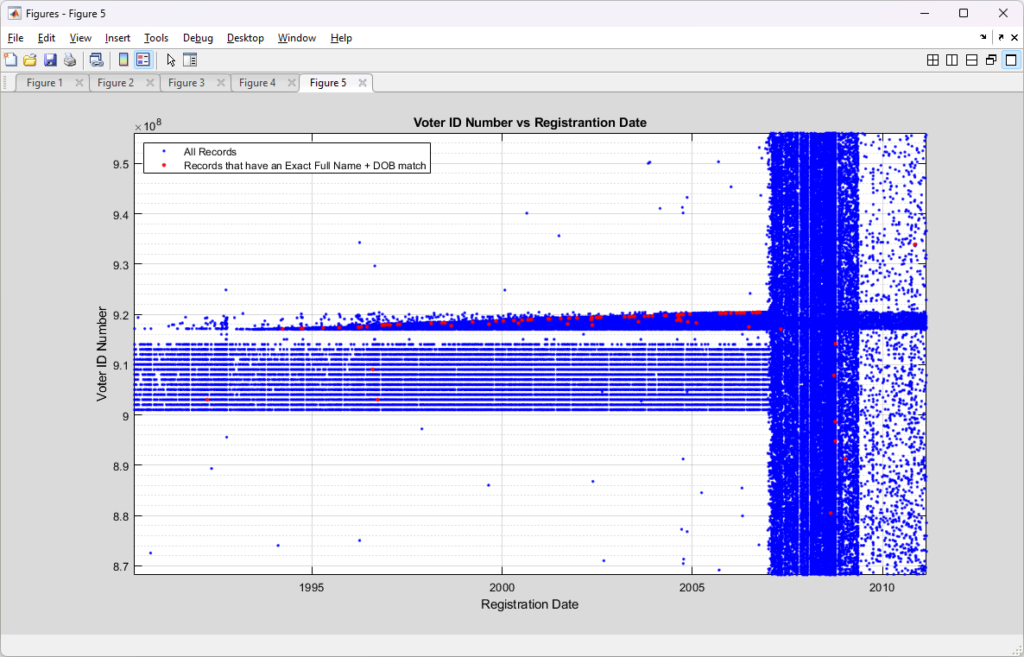

I previously put together an analysis that used the full name and date of birth information from the Virginia Registered Voter List (“RVL”) to look for duplicate registrations, either as exact matches or by using a string distance measure (the Levenshtein distance) to accommodate typos, abbreviations, and misspellings.

Just prior to the start of early voting in the 2024 November General Election, we were notified that the Department of Elections (“ELECT”) was removing the full date of birth from the data we purchase. This removal of the full date of birth increased the number of false positives in our duplicate detection scripts. Our organization, as well as others, was ready to go to court to compel ELECT to reinstate the data. (Link to our notice of violation letter is here).

Happily, we ended up not having to go to court, as ELECT decided to reinstate the data earlier this year (~May timeframe). This means we can resume our computation and detection of potentially duplicate entries with much more reliable results. The results below mirror our previous analysis, but with the newly updated data.

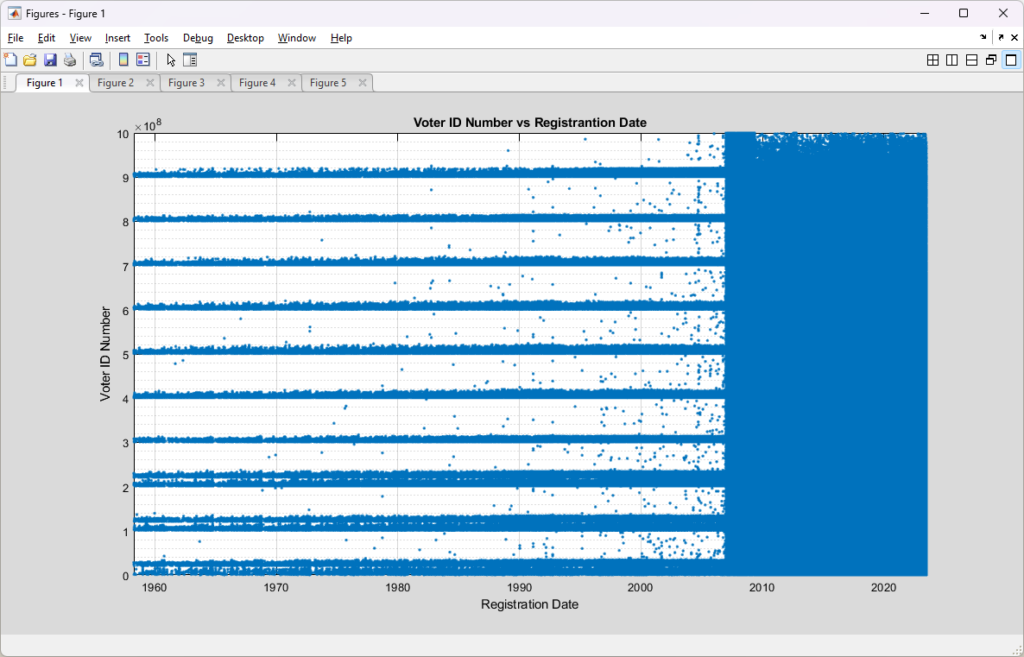

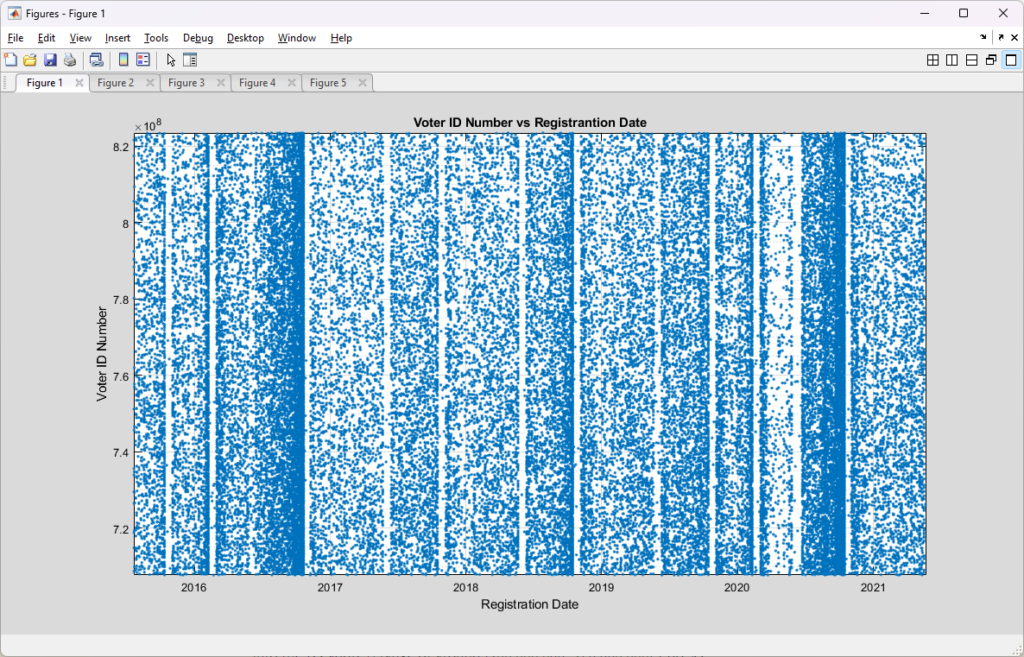

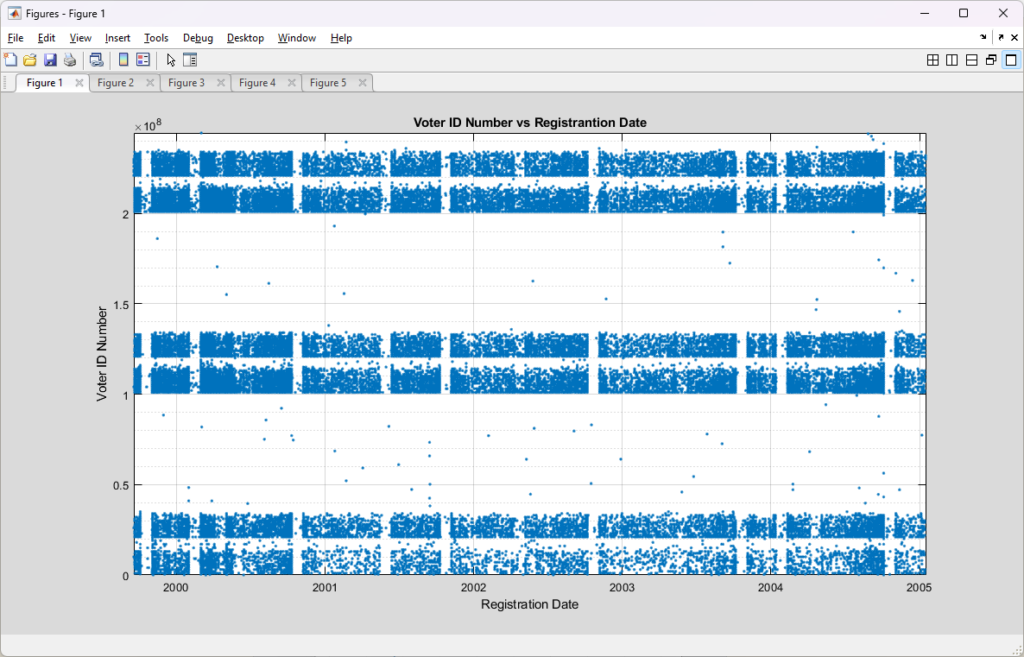

Using the latest Registered Voter List (RVL) and Voter History List (VHL) data purchased directly from the VA Department of Elections (ELECT), I wrote an analysis script to check for potentially duplicated registrant records in the RVL and to cross-reference duplicate pairings with the VHL to identify potential duplicate votes. The details are summarized below.

Please note that I will not publish voter Personally Identifiable Information (PII) on this blog. I have substituted fictitious, but representative, PII for all examples given below, and cryptographically hashed all voter information in the downloadable results file. I will make the detailed information available upon request to those who have the authorization to receive and process voter data (contact us).

Summary of Results:

As a baseline, there were 5,514 (as compared to 6,464 in the previous May 27, 2023 posting) records for STATUS=’Active’ registrants that met the definition of a “duplicate” when Social Security Number (SSN) is not available, as defined by the MOU between DMV and ELECT (section 7.3): the same First Name + Last Name + Full Date of Birth (DOB). It should be noted that most records held by DMV and ELECT have an SSN associated with them (or at least they should). SSN information is not distributed as part of the data we purchase from ELECT, however, so this is the appropriate standard baseline for this work.
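The baseline rule above can be sketched as a simple grouping step. This is a minimal illustration, not the actual analysis script: the field names (`voter_id`, `first`, `last`, `dob`) are hypothetical, and the upper-casing and whitespace trimming are assumptions rather than anything specified by the MOU.

```python
from collections import defaultdict
from itertools import combinations


def baseline_duplicate_pairs(records):
    """Return pairs of voter IDs sharing first name, last name, and full DOB."""
    groups = defaultdict(list)
    for rec in records:
        # Assumed normalization: trim whitespace and ignore letter case.
        key = (rec["first"].strip().upper(), rec["last"].strip().upper(), rec["dob"])
        groups[key].append(rec["voter_id"])

    pairs = []
    for ids in groups.values():
        # Every pair of distinct IDs inside one group is a candidate duplicate.
        pairs.extend(combinations(sorted(ids), 2))
    return pairs


# Fictitious records, in the same spirit as the examples in this post.
records = [
    {"voter_id": 1, "first": "Amy", "last": "McVoter", "dob": "12/05/1970"},
    {"voter_id": 2, "first": "AMY", "last": "MCVOTER", "dob": "12/05/1970"},
    {"voter_id": 3, "first": "Taylor", "last": "Voter", "dob": "02/16/2000"},
]
print(baseline_duplicate_pairs(records))  # [(1, 2)]
```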

Upgrading our definition of a potential duplicate to [First + Middle + Last + Suffix + DOB] and using a LevenshteinDistance=0 (meaning an exact match) drops the number of potential duplicates to 1,062 (1,982 previously), with each identified registrant in a pair having an exactly matching string result and unique voter ID numbers.

According to my derivations and simulations that are described in detail here, we should only expect to see an average of 11 (+/- 3) potential duplicate pairs (a.k.a. “collisions”) at a distance of 0. The observed count is roughly two orders of magnitude larger than this expectation. Such a discrepancy deserves further investigation and verification.

Allowing for a single character difference by setting LevenshteinDistance<=1 increases the pool of potential duplicates to 4,572 (5,568 previously). While this relaxation of the filter does allow us to find certain issues (described below), it also increases our chances of finding false positives. The LD metric results should not be viewed as a final determination, but simply as a useful tool to make an initial pass through the data and find candidate matches that still require further review, verification and validation.

Increasing to LevenshteinDistance<=2 brings the number of potential duplicates up to 27,178 (32,610 previously). When we increase to LD <= 3 we get an explosion of 158,940 (183,130 previously) potential duplicates.

It should be noted that, compared to our last full analysis (May 2023), the Department of Elections has reduced the number of exact duplicates by about 46%, and by approximately 13-18% for the inexact categories (17.9% at LD <= 1, 16.7% at LD <= 2, and 13.2% at LD <= 3, based on the counts quoted above).

Method:

For every entry in the latest RVL, I performed a string distance comparison, based on Levenshtein distance, between every possible pair of strings of (FIRST NAME + MIDDLE NAME + LAST NAME + SUFFIX + FULL DOB). For the ~6M+ different RVL entries, we therefore need to compute ~3.8 x 10^13 different string comparisons, and each string comparison can require upwards of 75 x 75 = 5,625 individual character comparisons, meaning the total number of character operations is on the order of 2 x 10^17 (roughly 200 quadrillion), not including logging and I/O.
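The scale of this computation can be reproduced with a few lines of arithmetic. The sketch below is an illustration only: the 6,000,000 row count is a round placeholder for the "~6M+" entries, and whether comparisons are counted as ordered (n x n) or unordered (n choose 2) is an assumption exposed as a parameter, so the printed values will differ somewhat from the figures quoted above.

```python
def comparison_cost(num_records, max_len=75, ordered=True):
    """Estimate string comparisons and worst-case character operations."""
    if ordered:
        # Upper bound: every record compared against every record.
        comparisons = num_records * num_records
    else:
        # Each unordered pair counted once.
        comparisons = num_records * (num_records - 1) // 2
    # Worst case for the dynamic-programming table: max_len x max_len cells per comparison.
    char_ops = comparisons * max_len * max_len
    return comparisons, char_ops


for ordered in (True, False):
    comparisons, char_ops = comparison_cost(6_000_000, ordered=ordered)
    print(f"ordered={ordered}: {comparisons:.3e} comparisons, {char_ops:.3e} character ops")
```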

A distance of 0 indicates the strings being compared are identical. A distance of 1 indicates that a single character can be changed, inserted or removed to convert one string into the other. A distance of 2 indicates that 2 modifications are required, etc.

Example: The string pair of “ALISHA” –> “ALISHIA” has an LD of 1, corresponding to the addition of an “I” before the final “A”.
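For readers who want to see the metric itself, here is a minimal sketch of the standard dynamic-programming (Wagner-Fischer) formulation of Levenshtein distance. It is a textbook version written for clarity, not the optimized code used for the full run.

```python
def levenshtein(a: str, b: str) -> int:
    """Count the single-character insertions, deletions, and substitutions
    needed to turn string a into string b."""
    rows, cols = len(a) + 1, len(b) + 1
    # dist[i][j] holds the edit distance between a[:i] and b[:j].
    dist = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        dist[i][0] = i  # delete all i characters of a[:i]
    for j in range(cols):
        dist[0][j] = j  # insert all j characters of b[:j]

    for i in range(1, rows):
        for j in range(1, cols):
            substitution_cost = 0 if a[i - 1] == b[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                      # deletion
                dist[i][j - 1] + 1,                      # insertion
                dist[i - 1][j - 1] + substitution_cost,  # match or substitution
            )
    return dist[-1][-1]


print(levenshtein("ALISHA", "ALISHIA"))  # 1
```

The nested loop fills one table cell per character pair, which is where the "75 x 75" worst-case figure per comparison comes from.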

I aggregated all of the Levenshtein distance pairings that were less than or equal to 3 characters different in order to identify potential (key word) duplicated registrants. For each pairing, I additionally looked at the voter history information for each registrant in the pair to determine if there was a potential (again … key word) for multiple ballots to be cast by the same person in any given election. As we allow for more characters to be different, we are likely to include many more false positive matches, even if we are also catching more true positives.
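The voter history cross-reference described above amounts to a set intersection per candidate pair. The following is a minimal sketch under assumed data shapes: `pairs` is a list of voter ID tuples and `history` maps each voter ID to the set of elections with a recorded ballot. Both structures, and the election labels, are hypothetical and do not reflect the actual VHL file layout.

```python
def shared_elections(pairs, history):
    """For each candidate pair, list elections where both IDs have a recorded ballot."""
    flagged = {}
    for id_a, id_b in pairs:
        common = history.get(id_a, set()) & history.get(id_b, set())
        if common:
            # Only pairs with at least one election in common are flagged.
            flagged[(id_a, id_b)] = sorted(common)
    return flagged


# Fictitious candidate pairs and voting histories.
pairs = [(101, 102), (201, 202)]
history = {
    101: {"2020 General", "2022 General"},
    102: {"2020 General"},
    201: {"2021 General"},
    202: {"2022 General"},
}
print(shared_elections(pairs, history))  # {(101, 102): ['2020 General']}
```

A flagged pair is still only a candidate: it shows that two similar-looking records both have a ballot recorded in the same election, not that one person voted twice.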

For example: At a distance of 4 the strings of “Dave Joseph Smith M 10/01/1981” and “Tony Joseph Smith M 10/01/1981” at the same address would produce a potential match, but so would “Davey Joseph Smith M 10/01/1981” and “David Josiph Smith M 10/02/1981”. The first pair is more likely to be a false positive due to twins, while the second is more likely to be due to typos, mistakes, or use of nicknames and might warrant further investigation. A much stronger potential match would be something like “David Josiph Smith M 10/01/1981” and “David Joseph Smith M 10/01/1981”, with a distance of 1 at the same address. In an attempt to limit false positives, I have clamped the distance checks to <= 3 in this analysis.

Note that the Levenshtein distance measure is able to identify insertions and deletions as well as character changes, which is an improvement over the Hamming distance measure. This is illustrated by the following pairing: “David Joseph Smith M 10/01/1981” and “Dave Joseph Smith M 10/01/1981”. The change from “id” to “e” in the first name removes a character, which shifts the position of every character in the remainder of the string. The Levenshtein metric correctly returns a small distance of 2, whereas a position-by-position Hamming comparison returns 27 (every one of the 30 overlapping positions after the first three characters differs).
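The contrast between the two measures is easy to reproduce. In the sketch below, `hamming_overlap` is a hypothetical helper: Hamming distance is strictly defined only for equal-length strings, so this version assumes the comparison is made over the overlapping positions. The Levenshtein function is a compact two-row variant of the standard dynamic-programming algorithm.

```python
def hamming_overlap(a: str, b: str) -> int:
    """Count mismatched positions over the overlapping length.
    zip() stops at the end of the shorter string."""
    return sum(x != y for x, y in zip(a, b))


def levenshtein(a: str, b: str) -> int:
    """Edit distance, keeping only the previous and current table rows."""
    prev = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        curr = [i]
        for j, char_b in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,                       # deletion
                curr[j - 1] + 1,                   # insertion
                prev[j - 1] + (char_a != char_b),  # match or substitution
            ))
        prev = curr
    return prev[-1]


a = "David Joseph Smith M 10/01/1981"
b = "Dave Joseph Smith M 10/01/1981"
print(levenshtein(a, b), hamming_overlap(a, b))  # 2 27
```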

Also note that with the official records obtained from ELECT, and in accordance with the laws of VA, I do not have access to the Social Security number or driver's license number for each registration record, which would help in distinguishing potential duplicate errors from things like twins. I only have the first name, middle name, last name, suffix, month of birth, day of birth, year of birth, gender, and address information to work with. I can therefore only take things so far before someone else (with investigative authority and the ability to access those other fields) would need to step in to confirm and validate these findings.

Results:

The summary totals are as follows, with detailed examples.

| | DMV_ELECT MOU Standard | LD <= 0 | LD <= 1 | LD <= 2 | LD <= 3 |
| --- | --- | --- | --- | --- | --- |
| Number of Potential Duplicate Registrant Pairs | 6,108 | 1,250 | 5,116 | 29,480 | 170,772 |
| Number of Potential Duplicate Registrant Pairs (Active Only) | 5,514 | 1,062 | 4,572 | 27,178 | 158,940 |
| Number of Potential Duplicate Ballots | 2,856 | 58 | 1,580 | 16,984 | 109,428 |
| Number of Potential Duplicate Ballots (Active Only) | 2,770 | 54 | 1,552 | 16,410 | 105,932 |

Examples of Types of Issues Observed:

NOTE THE BELOW INFORMATION HAS HAD THE VOTER PERSONALLY IDENTIFIABLE INFORMATION (“PII”) FICTIONALIZED. WHILE THESE ARE BASED ON REAL DATA TO ILLUSTRATE THE DIFFERENT TYPES OF OBSERVATIONS, THEY DO NOT REPRESENT REAL VOTER INFORMATION.

Example #1: The following set of records has an exact match (distance = 0) of full name and full birthdate (including year), but different addresses and different voter ID numbers, AND there was a vote cast from each of those unique voter IDs in the 2020 General Election. While it’s remotely possible that two individuals share the exact same name, month, day and year of birth … it is probabilistically unlikely (see here), and should warrant further scrutiny.

Voter Record A:

AMY BETH McVOTER 12/05/1970 F 12345 CITIZEN CT

Voter Record B:

AMY BETH McVOTER 12/05/1970 F 5678 McPUBLIC DR

Example #2: This set of records has a single character different (distance of 1) in the first name, but middle name, last name, birthdate and address are identical AND both records are associated with votes that were cast in the 2020, 2021, and 2022 November General Elections. While it is possible that this is a pair of twins (with the same middle name) who live together, it at least bears looking into.

Voter Record A:

TAYLOR DAVID VOTER 02/16/2000 M 6543 OVERLOOK AVE NW

Voter Record B:

DAYLOR DAVID VOTER 02/16/2000 M 6543 OVERLOOK AVE NW

Example #3: This set of records has two characters different (distance of 2) in their birthdate, but name and address are identical AND the birth years are too close together for a child/parent relationship, AND both records are associated with votes that were cast in the 2020 and 2022 November General Elections.

Voter Record A:

REGINA DESEREE MACGUFFIN 02/05/1973 F 123 POPE AVE

Voter Record B:

REGINA DESEREE MACGUFFIN 03/07/1973 F 123 POPE AVE

Example #4: This set of records again has a single character different (distance of 1) in the first name (but not the first letter this time), while the last name, birthdate and address are identical. There were also multiple votes cast in the 2019 and 2022 November General Elections from these registrants.

Voter Record A:

EDGARD JOHNSON 10/19/1981 M 5498 PAGELAND BLVD

Voter Record B:

EDUARD JOHNSON 10/19/1981 M 5498 PAGELAND BLVD

Example #5: This set of records has two characters different (distance of 2), one in the first name and one in the middle name, while the last name, birthdate, gender and address are identical. There were also multiple votes cast in the 2021 and 2022 November General Elections from these registrants. Again, given the information that ELECT provides, it is possible that these records represent a set of twins.

Voter Record A:

ALANA JAVETTE THOMPSON 01/01/2003 F 123 CHARITY LN

Voter Record B:

ALAYA YAVETTE THOMPSON 01/01/2003 F 123 CHARITY LN

Example #6: The following set of records has an exact match (distance = 0) of full name and full birthdate (including year), and the same address, but different voter ID numbers. There were no duplicate votes in the same election detected between the two ID numbers.

Voter Record A:

JAMES TIBERIUS KIRK 03/22/2223 M 1701 Enterprise Bridge

Voter Record B:

JAMES TIBERIUS KIRK 03/22/2223 M 1701 Enterprise Bridge

Example #7: The following set of records has an exact match (distance = 0) of full name and full birthdate (including year) and the same address, but different gender and voter ID numbers. There were no duplicate votes in the same election detected between the two ID numbers.

Voter Record A:

MAXWELL QUAID CLINGER 11/03/2004 M 4077 MASH DR

Voter Record B:

MAXWELL QUAID CLINGER 11/03/2004 U 4077 MASH DR

Example #8: The following set of records has a single punctuation character different, with the same address but different voter ID numbers. There were no duplicate votes in the same election detected between the two ID numbers.

Voter Record A:

JOHN JACOB JINGLHIEMER-SCHMIDT 06/29/1997 M 12345 JACOBS RD

Voter Record B:

JOHN JACOB JINGLHIEMER SCHMIDT 06/29/1997 M 12345 JACOBS RD

Results Dataset:

A full version of the aggregated Excel data is provided below. However, all voter information (ID, first name, middle name, last name, DOB, gender, address) has been removed and replaced by a one-way hash value, with a randomized salt, based on the voter [First Name, Middle Name, Last Name, Suffix, and DOB]. The full file with specific voter information can be provided upon request to parties authorized by ELECT to receive and process voter information, Election Officials, or Law Enforcement.
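To make the hashing step concrete, here is a minimal sketch of a salted one-way hash over the same five fields. The choice of SHA-256, the `|` field separator, the upper-casing, and the 16-byte salt are all assumptions made for illustration; the hash function actually used for the published file is not specified here.

```python
import hashlib
import secrets


def hash_voter(first, middle, last, suffix, dob, salt):
    """Return a hex digest that stands in for the voter's identifying fields."""
    # Assumed canonical form: fields joined with "|" and upper-cased.
    key = "|".join([first, middle, last, suffix, dob]).upper()
    # The salt is prepended so the digest cannot be reproduced without it.
    return hashlib.sha256(salt + key.encode("utf-8")).hexdigest()


# One random salt per published file (an assumption); it is never published.
salt = secrets.token_bytes(16)
print(hash_voter("Amy", "Beth", "McVoter", "", "12/05/1970", salt))
```

Reusing one salt within a file keeps the mapping consistent, so two exactly matching records still show the same hash, while anyone without the salt cannot confirm a guessed name and birthdate by recomputing the digest.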